At Millicast / CoSMo we have been working on AV1 for a long time and have implemented all our work in the Medooze Media Server:

And another world first for CoSMo and @medooze . The first ever #AV1 call in #webrtc (with RTP encapsulation) via SFU 😂 cc/ @agouaillard pic.twitter.com/h2i4U7FkQ5

— Sergio Garcia Murillo (@murillo) October 31, 2018

Real-time AV1 encoding has been available in libwebrtc for almost a year now, and the recent announcement of real-time AV1 encoding being enabled by default in the Chrome source code, and later in Chrome Canary and nightly builds, is great news for everyone using Real-Time Communication, and WebRTC in particular.

For CoSMo it is a great moment, representing the pinnacle of years of work on the AV1 RTP Payload specification, the real-time AV1 encoder integration in libwebrtc and Chrome, and the Medooze reference SFU and KITE reference test suite implementations.

Dr. Alex wrote a great blog post covering the history of what has brought us here, the vision forward for WebRTC, and what you should expect in terms of performance from real-time AV1 encoding in WebRTC and Chrome. As outlined in that article, one piece is still missing to truly unleash the power of AV1 in Chrome: SVC support via the Scalable Video Coding (SVC) Extension for WebRTC https://www.w3.org/TR/webrtc-svc/.

Chrome already supported partial SVC (temporal scalability only) for VP8 and VP9 via the W3C SVC extension: more specifically, the L1T2 and L1T3 modes, with a single spatial layer and 2 or 3 temporal layers, were available as an experimental web platform feature behind a flag. Spatial scalability for VP9 was also available via a Field Trial or via SDP munging (adding one extra SSRC per spatial layer).

The AV1 codec specification provides a wider range of SVC scalability modes out of the box, and libwebrtc implements a subset of them (L1T2, L1T3, L2T1, L2T1h, L2T1_KEY, L2T2, L2T2_KEY, L2T2_KEY_SHIFT, L3T1, L3T3, L3T3_KEY and S2T1), which allows choosing different trade-offs between scalability, robustness, bitrate and CPU overhead.
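The mode names encode the layer structure: `L` (or `S` for modes whose spatial layers are encoded independently) followed by the number of spatial layers, then `T` and the number of temporal layers, plus optional suffixes. As a rough sketch, here is a hypothetical helper (not a libwebrtc API) that decomposes a mode name. The suffix meanings are our reading of the W3C SVC extension naming convention: `h` for a 1.5:1 instead of 2:1 resolution ratio, `_KEY` for inter-layer dependency only at key frames, `_SHIFT` for a shifted temporal structure.

```python
import re
from dataclasses import dataclass

# Hypothetical parser for scalability mode names such as "L3T3_KEY" or "S2T1".
MODE_RE = re.compile(r"^([LS])(\d)T(\d)(h?)(_KEY)?(_SHIFT)?$")


@dataclass(frozen=True)
class ScalabilityMode:
    name: str
    spatial_layers: int
    temporal_layers: int
    independent_spatial: bool  # "S" prefix: spatial layers encoded independently
    ratio_1_5: bool            # "h" suffix: 1.5:1 resolution ratio (assumed meaning)
    key_only_dependency: bool  # "_KEY": inter-layer dependency only at key frames
    shifted: bool              # "_SHIFT": shifted temporal prediction structure


def parse_mode(name: str) -> ScalabilityMode:
    m = MODE_RE.match(name)
    if m is None:
        raise ValueError(f"unrecognised scalability mode: {name}")
    prefix, spatial, temporal, h, key, shift = m.groups()
    return ScalabilityMode(
        name=name,
        spatial_layers=int(spatial),
        temporal_layers=int(temporal),
        independent_spatial=(prefix == "S"),
        ratio_1_5=(h == "h"),
        key_only_dependency=(key is not None),
        shifted=(shift is not None),
    )


# The AV1 modes listed above as implemented in libwebrtc.
LIBWEBRTC_AV1_MODES = [
    "L1T2", "L1T3", "L2T1", "L2T1h", "L2T1_KEY", "L2T2", "L2T2_KEY",
    "L2T2_KEY_SHIFT", "L3T1", "L3T3", "L3T3_KEY", "S2T1",
]
```

For example, `parse_mode("L3T3_KEY")` reports 3 spatial and 3 temporal layers with key-frame-only inter-layer dependency.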

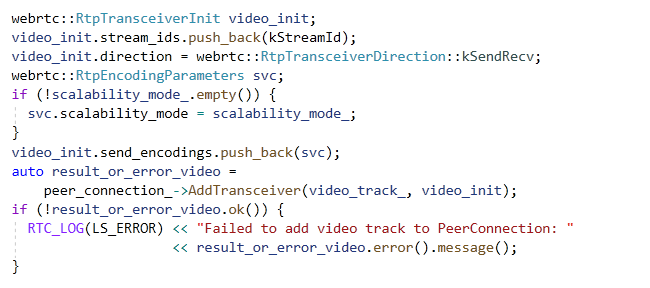

AV1 SVC is not yet fully testable in Chrome, though, at least until this patch gets merged. Never mind: one can already get familiar with real-time AV1 SVC using libwebrtc in native applications.

AV1 SVC Dependency Descriptor

Several years ago, in collaboration with Gustavo Garcia, I published a blog post about VP9 SVC layering and how to use it in SFUs.

In order to perform VP9 SVC layer selection in SFUs, the information required by the SFU was carried inside the VP9 Payload Descriptor in a compact form. While two modes of operation were supported at that time, the flexible mode and the non-flexible mode, it seemed that the non-flexible mode, being easier to implement, would cover most of the use cases for SVC.

Fast forward to the present day: more advanced SVC modes are now in use, especially the K-SVC or “S” modes (in which each spatial layer is encoded independently, so there is no inter-layer dependency outside of key frames, which smooths out bandwidth usage) and Shifted Temporal Prediction Structures (which lower the bandwidth usage spikes, and the potential congestion resulting from them, especially when sending I-Frames). These cannot be represented using the non-flexible mode, and they have made VP9 SVC implementations much harder, as the SFU is required either to parse and process the dependency structure or to hardcode its behaviour based on the current libwebrtc implementation.

A similar issue arose when trying to standardize the RTP Frame Marking header extension, an attempt to provide access to the video information required to perform SFU operations without having to parse the codec-dependent RTP payload format.

The AV1 RTP specification tries to solve this issue by providing all the information required for SFU operations in a codec-agnostic way, introducing the following key concepts:

- Decode Targets, the subset of Frames needed to decode a coded video sequence at a given spatial and temporal layer.

- Chains, the minimal sequence of Frames that must be received/forwarded for the stream to remain decodable without requiring any further recovery mechanism.

The AV1 RTP specification defines a new RTP header extension, called the Dependency Descriptor, to transmit this metadata in each RTP packet.

While the Frame Marking specification was designed for the specific use case of encrypted payloads, there are many advantages to putting information that helps SFUs do their job in the RTP headers. By moving the metadata to a header extension, SFUs do not need to parse the AV1 OBU payloads, which allows them to perform better and reduces delay.

When the media is end-to-end encrypted and the RTP payload is opaque, SFUs can still operate solely based on the Dependency Descriptor and the rest of the unencrypted headers.

The Dependency Descriptor provides the specific metadata that allows the SFU to decide, immediately upon receiving a packet, whether to forward it to one or more downstream endpoints, or which recovery mechanism must be used to restore the stream's decodability:

- The RTP packet belongs to a frame which is required for the currently selected Decode target. This is provided by the Decode Target Indication (DTI) values: Not present, Discardable, Switch and Required.

- All frames referenced by the current frame have been forwarded to the endpoint. This is provided by specifying the frame numbers (as differences relative to the current frame number) of the previous frames that this frame references.

- All frames referenced by the current frame are decodable. This is provided by the Chain information, which is present in all packets, allowing an SFU to detect a broken Chain regardless of the loss pattern.

By having access to this information in every packet, the SFU can not only immediately decide whether it has to forward the packet to the receiving endpoint for the selected spatial and temporal layers (i.e. a specific Decode Target), but can also immediately and dynamically decide how to react to packet losses.

Confused already? In order to reduce overhead, the Dependency Descriptor uses templates to avoid sending the same information over and over again: each RTP packet just references the id of the template that applies to it. For more flexibility, it is also possible to override the template settings and send inline information relevant only to the current frame.
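To make the template idea concrete, here is a minimal sketch using a simplified, hypothetical in-memory model (class and function names are ours, loosely mirroring the fields described in the AV1 RTP specification). Each template stores the layer ids, the Decode Target Indications, the frame diffs and the chain diffs; a packet either reuses them as-is or overrides individual fields with inline values.

```python
from dataclasses import dataclass, replace
from typing import Optional, Sequence, Tuple


@dataclass(frozen=True)
class FrameDependencyTemplate:
    spatial_id: int
    temporal_id: int
    # One DTI per Decode Target: 0=Not present, 1=Discardable, 2=Switch, 3=Required
    dtis: Tuple[int, ...]
    frame_diffs: Tuple[int, ...]  # referenced frames, as differences to this frame number
    chain_diffs: Tuple[int, ...]  # previous frame in each Chain, as differences


def resolve_frame_dependencies(
    templates: Sequence[FrameDependencyTemplate],
    template_id: int,
    custom_dtis: Optional[Tuple[int, ...]] = None,
    custom_frame_diffs: Optional[Tuple[int, ...]] = None,
    custom_chain_diffs: Optional[Tuple[int, ...]] = None,
) -> FrameDependencyTemplate:
    """Look up the referenced template and apply any per-frame inline overrides.

    Simplification: template_id is used directly as a list index; the real
    format also applies a template id offset carried in the Template Structure.
    """
    base = templates[template_id]
    return replace(
        base,
        dtis=base.dtis if custom_dtis is None else custom_dtis,
        frame_diffs=base.frame_diffs if custom_frame_diffs is None else custom_frame_diffs,
        chain_diffs=base.chain_diffs if custom_chain_diffs is None else custom_chain_diffs,
    )
```

A packet that only carries a template id gets the template unchanged; one that carries inline frame diffs keeps everything else from the template.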

The Dependency Descriptor is a bit unusual compared to what SFU devs are used to: it uses a bitstream format and requires keeping some state from previously received packets in order to process the current one. This is a bit hard to swallow at first, but trust me, after you go through the 5 stages of grief (like I have done), you will find it very efficient and convenient. Let me help you along the path without the grief 🙂

Let’s take a look at what this information looks like in the Dependency Descriptor header:

If you are an SFU developer, you might already have reached the second stage of grief: anger.

On a performance note, most of this information comes from the Template Dependency Structure, which is typically only sent with I-Frames, so the traffic overhead is almost negligible. Once the Template Dependency Structure has been sent, the rest of the RTP packets will look like this:

Much easier! There are just two bits indicating the start and end RTP packets of a Frame (remember, several Frames are sent with the same RTP timestamp if spatial scalability is in use), the frame number and the template id that should be used for this Frame. This information takes only 3 bytes of overhead to transport.
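Those 3 bytes are the mandatory fields of the descriptor: `start_of_frame` (1 bit), `end_of_frame` (1 bit), `frame_dependency_template_id` (6 bits) and `frame_number` (16 bits), read most significant bit first. A minimal parsing sketch follows (the function name is hypothetical); extended fields, present when the extension is longer than 3 bytes, are deliberately not handled here.

```python
from typing import NamedTuple


class MandatoryFields(NamedTuple):
    start_of_frame: bool
    end_of_frame: bool
    template_id: int   # frame_dependency_template_id, 6 bits
    frame_number: int  # 16 bits, wraps around at 65536


def parse_mandatory_fields(ext: bytes) -> MandatoryFields:
    """Decode the first 3 bytes of a Dependency Descriptor header extension."""
    if len(ext) < 3:
        raise ValueError("dependency descriptor must be at least 3 bytes")
    first = ext[0]
    return MandatoryFields(
        start_of_frame=bool(first & 0x80),  # most significant bit
        end_of_frame=bool(first & 0x40),    # next bit
        template_id=first & 0x3F,           # remaining 6 bits
        frame_number=(ext[1] << 8) | ext[2],
    )
```

For instance, the bytes `C5 00 68` decode to a packet that both starts and ends a frame, uses template id 5, and carries frame number 104.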

If we look at the referenced template, it looks like this:

Which means that:

- The frame is from the spatial layer 2 and temporal layer 2.

- The Decode Target Indications show that the frame is not used by any Decode Target except the last one, for which it is discardable. That means that the SFU can drop this packet, as the Frame will never be referenced by any future Frame.

- The current frame depends on the previous frames with a [3,1] difference in frame number, that is, frames #101 and #103 (the current frame being #104). If those frames have not been received/forwarded, the receiver will not be able to decode the current one.

- The previous frame in each of the Chains, also in diff format, so [11,10,9] means frame #93 for Chain 0, #94 for Chain 1 and #95 for Chain 2 (more on this later).
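Since all dependencies are carried as differences, resolving them is plain arithmetic. A minimal sketch with the numbers from the example above (current frame #104; the helper names are hypothetical), taking into account that `frame_number` is a 16-bit field that wraps around:

```python
FRAME_NUMBER_MODULO = 1 << 16  # frame_number is a 16-bit field and wraps around


def apply_diffs(frame_number, diffs):
    """Convert differential frame numbers into absolute ones."""
    return [(frame_number - d) % FRAME_NUMBER_MODULO for d in diffs]


def references_forwarded(frame_number, frame_diffs, forwarded):
    """True if every frame referenced by this frame has already been forwarded."""
    return all(f in forwarded for f in apply_diffs(frame_number, frame_diffs))


current = 104
referenced = apply_diffs(current, [3, 1])       # [101, 103]
chain_prev = apply_diffs(current, [11, 10, 9])  # [93, 94, 95] for Chains 0, 1 and 2
```

The modulo matters right after a wrap-around: frame #1 with a diff of 3 references frame #65534.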

In the Template Dependency structure there is also the following information that will be used for layer selection and packet forwarding:

- Decode Target mapping to spatial and temporal layers. This is not explicitly sent in the Dependency Descriptor, but can be obtained by parsing the Decode Target Indications in each Template Dependency Structure (the actual algorithm is in the spec).

- Decode Target protected by Chain information, which indicates, for each Decode Target, which Chain protects it. That is, if a Decode Target is being forwarded, which Chain we have to track in order to detect that the stream is no longer decodable and request an I-Frame from the encoder, or use a Layer Refresh Request (LRR) once it is implemented.

- Resolutions for each spatial layer, which is optional anyway.

- Which Decode Targets are active. This is a very important piece of information, as it allows the SFU to immediately detect when a layer has been stopped on the encoding side and decide to switch to a different one without having to wait for further packets or timeouts. The SFU will also need to modify this information when performing layer selection, so that the receiver is able to pass the frame to the decoder as soon as possible without waiting for inactive Decode Targets.
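The Decode Target to layer mapping mentioned above can be derived from the templates. The sketch below follows our reading of the spec's algorithm: each Decode Target gets the highest spatial and temporal ids among the templates in which its indication is not "Not present". It also assumes that bit i of the active Decode Target mask corresponds to Decode Target i, and uses the 2-bit DTI encoding (0 = Not present). The function names are hypothetical.

```python
from typing import List, Sequence, Tuple

NOT_PRESENT = 0  # DTI value for "Not present"


def decode_target_layers(
    template_layers: Sequence[Tuple[int, int]],  # (spatial_id, temporal_id) per template
    template_dtis: Sequence[Sequence[int]],      # DTI per Decode Target, per template
    num_decode_targets: int,
) -> List[Tuple[int, int]]:
    """Map each Decode Target to the highest (spatial, temporal) layer it needs."""
    layers = []
    for dt in range(num_decode_targets):
        spatial = temporal = 0
        for (sid, tid), dtis in zip(template_layers, template_dtis):
            if dtis[dt] != NOT_PRESENT:
                spatial = max(spatial, sid)
                temporal = max(temporal, tid)
        layers.append((spatial, temporal))
    return layers


def active_decode_targets(mask: int, num_decode_targets: int) -> List[int]:
    """Indices of the active Decode Targets (assumes bit i <-> Decode Target i)."""
    return [dt for dt in range(num_decode_targets) if mask & (1 << dt)]
```

For an L1T2-like structure with templates at layers (0,0), (0,0) and (0,1) and DTIs [2,2], [2,2] and [0,1], this yields [(0, 0), (0, 1)]: Decode Target 0 is the base temporal layer and Decode Target 1 adds temporal layer 1.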

Note that in order to process (and even parse) the Dependency Descriptor header of an RTP packet, the SFU needs to keep the latest Template Structure sent by the sender in the last I-Frame.

So, we have a lot of information available for each RTP packet, but how do I actually perform the layer selection and forwarding on the SFU side?

The AV1 RTP specification indicates that the SFU should use the Decode Targets to perform layer selection. However, there are two main problems when trying to decide which one to forward:

- The WebRTC APIs do not expose the Decode Target information, nor do they provide any API to directly control Decode Targets. While there is a mapping between Decode Targets and temporal and spatial layers, it is possible for two different Decode Targets to be associated with the same spatial and temporal layer (although this doesn’t happen in any of the scalability modes currently implemented in libwebrtc or specified in the AV1 spec).

- The Decode Target selected for forwarding may be deactivated at any given moment in time, so the algorithm has to be flexible enough to react to it and switch to a different layer, even on K-SVC or “S” modes.

So, for each packet we are going to take the following actions:

- Retrieve the Dependency Descriptor from the current RTP packet's header extension, along with the latest received Template Structure and Active Decode Target mask.

- Check whether all the frames referenced by the Frame have been forwarded, to decide if the Frame is decodable or not.

- Filter out the Decode Targets that are inactive and order them in reverse spatial and temporal layer order.

- Find the first Decode Target whose spatial and temporal layers are lower than or equal to the ones we wish to forward and which is protected by a Chain that is not broken, that is, one for which all the frames of that Chain have been received and forwarded.

- If there is no Decode Target available with a valid Chain, drop the packet and request an I-Frame from the sender.

- Check the Decode Target Indication to see if the Frame is present in the selected Decode Target, and drop the packet if it is “Not present”.

- Set the RTP packet marker bit if it is the last packet of the current frame and the forwarded Decode Target's spatial and temporal layers are the maximum ones that we are going to forward, based on the application settings and the active Decode Target mask.

- For the last packet of the Frame, check whether all the packets of the Frame have been forwarded and, if so, add the Frame to the internal list of forwarded Frames.
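The steps above can be sketched in Python as follows. This is a simplified, hypothetical model, not the Medooze implementation. It assumes packets arrive in order, with a missing packet treated as lost, and that the Template Structure has already been turned into a list of Decode Targets with their layers and protecting Chain. It also uses simplified rules: a Chain counts as intact if its previous frame was forwarded (a chain diff of 0 is assumed to mark the start of a Chain), and the marker bit is set on the last packet of a frame in the top forwarded spatial layer.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Sequence, Set

NOT_PRESENT, DISCARDABLE, SWITCH, REQUIRED = 0, 1, 2, 3  # DTI values
MOD16 = 1 << 16  # frame numbers and RTP sequence numbers are both 16-bit


@dataclass
class DecodeTarget:
    index: int     # position in the DTI list and in the active mask
    spatial: int
    temporal: int
    chain: int     # index of the Chain protecting this Decode Target


@dataclass
class Packet:  # hypothetical, already-parsed RTP packet plus Dependency Descriptor
    seq: int
    frame_number: int
    start_of_frame: bool
    end_of_frame: bool
    spatial_id: int
    dtis: Sequence[int]
    frame_diffs: Sequence[int]
    chain_diffs: Sequence[int]
    marker: bool = False


@dataclass
class LayerSelector:
    decode_targets: List[DecodeTarget]  # derived from the latest Template Structure
    active_mask: int                    # latest active Decode Target mask
    max_spatial: int                    # desired layers, from the application settings
    max_temporal: int
    forwarded: Set[int] = field(default_factory=set)
    keyframe_requested: bool = False
    _frame_complete: bool = False
    _last_seq: Optional[int] = None

    def _abs(self, pkt: Packet, diff: int) -> int:
        return (pkt.frame_number - diff) % MOD16

    def _chain_intact(self, pkt: Packet, chain: int) -> bool:
        diff = pkt.chain_diffs[chain]
        # Assumption: a diff of 0 means the Chain starts at this frame (e.g. key frames).
        return diff == 0 or self._abs(pkt, diff) in self.forwarded

    def _select(self, pkt: Packet) -> Optional[DecodeTarget]:
        # Keep active Decode Targets only, highest spatial/temporal layers first.
        active = [dt for dt in self.decode_targets if self.active_mask & (1 << dt.index)]
        active.sort(key=lambda dt: (dt.spatial, dt.temporal), reverse=True)
        for dt in active:
            if (dt.spatial <= self.max_spatial and dt.temporal <= self.max_temporal
                    and self._chain_intact(pkt, dt.chain)):
                return dt
        return None

    def on_packet(self, pkt: Packet) -> bool:
        """Return True if the packet should be forwarded."""
        decodable = all(self._abs(pkt, d) in self.forwarded for d in pkt.frame_diffs)
        dt = self._select(pkt)
        if dt is None:
            self.keyframe_requested = True  # no Decode Target with an intact Chain
            return False
        if not decodable or pkt.dtis[dt.index] == NOT_PRESENT:
            return False
        # Track whether every packet of the frame was forwarded (consecutive seqs).
        if pkt.start_of_frame:
            self._frame_complete = True
        elif self._last_seq is None or pkt.seq != (self._last_seq + 1) % MOD16:
            self._frame_complete = False
        self._last_seq = pkt.seq
        pkt.marker = pkt.end_of_frame and pkt.spatial_id == dt.spatial
        if pkt.end_of_frame and self._frame_complete:
            self.forwarded.add(pkt.frame_number)
        return True
```

With an L1T2-like setup and `max_temporal=0`, temporal layer 1 frames are dropped because their DTI for the base Decode Target is "Not present", while the base layer keeps flowing because its Chain stays intact.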

Note that we are not currently using the “Switch” Decode Target Indication for upswitching between layers as we rely on the referenced Frames and Chains instead.

The Medooze Media Server implements a receiver packet queue to deal with NACKs and retransmissions, so the layer selection algorithm receives the RTP packets in order, and a missing packet is automatically considered lost. As of today, we are not using the Dependency Descriptor content to help manage NACK/RTX behaviour in our media server; in other words, we send a NACK for any missing packet. By using the Dependency Descriptor, we eventually expect to further reduce latency and bandwidth overhead, as we would only send NACKs for packets that are critical for the decoder.

Other servers that do not implement this queue, and instead forward RTP packets as they are received, would have to modify the layer selection algorithm above: they would have to be optimistic about packet losses, forward packets as they are received, and only take recovery actions once a packet has been permanently lost. This means that checking whether the Chains are broken or the referenced Frames have been forwarded would have to be performed slightly differently.

On a last note, remember that the Dependency Descriptor has been designed to be codec agnostic and could, in theory, be reused with any other video codec. So, potentially, this layer selection logic and these enhancements could be applied to all other existing codecs, giving a unified implementation for all of them!

Also, not only will the Dependency Descriptor make SFUs, and the systems based on them, more efficient and resilient; it is also fully compatible with E2EE, and especially SFrame. It is also compatible with the newly proposed WebRTC HTTP Ingest Protocol (WHIP) for streaming platforms.