Bitrate Adaptation, one of the great features brought to us by HLS

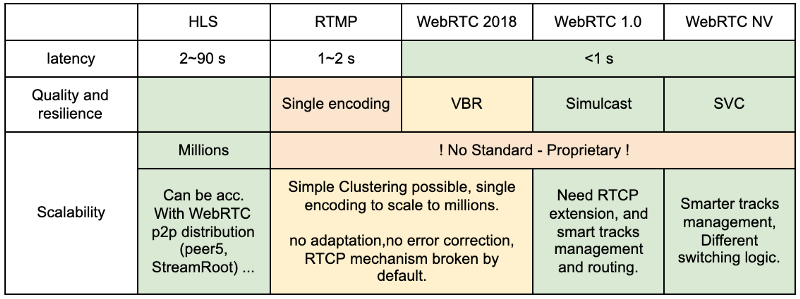

In the streaming world, HLS brought several much-needed optimizations over RTMP, at the cost of latency. One of them was bitrate adaptation. In most cases, RTMP needed a sustained, constant bitrate to be able to stream, and you could not change the resolution of the stream without some kind of media server re-encoding it on the fly. If the bandwidth dropped below a certain value, streaming was simply not possible.

When streaming pre-recorded content, one has almost unlimited time to deal with everything that is not distribution, including encoding. HLS solved the problem of bandwidth adaptation elegantly, by pre-encoding several resolutions in advance. It then came up with a distribution mechanism based on file chunks, which allows the player to switch dynamically from one resolution to another while the content is being delivered. If the bandwidth drops below a certain value, the player switches to a lower resolution accordingly.
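
As a sketch of the player side of this, the TypeScript below parses an HLS master playlist (the `#EXT-X-STREAM-INF` tag and its `BANDWIDTH`/`RESOLUTION` attributes come from RFC 8216) and picks the highest rendition that fits a measured throughput. The function names, the 0.8 safety margin and the sample playlist are illustrative assumptions; real players use more elaborate throughput and buffer heuristics.

```typescript
// Sketch: pick an HLS rendition from a master playlist given a measured
// throughput. Only BANDWIDTH and RESOLUTION are parsed; the 0.8 safety
// factor is an arbitrary illustrative choice, not a value from any player.
interface Variant {
  bandwidth: number; // peak bits per second, from BANDWIDTH=
  resolution?: string; // e.g. "1920x1080", from RESOLUTION=
  uri: string; // media playlist URI on the line after the tag
}

function parseMasterPlaylist(text: string): Variant[] {
  const lines = text.split(/\r?\n/).map((l) => l.trim());
  const variants: Variant[] = [];
  const tag = "#EXT-X-STREAM-INF:";
  for (let i = 0; i < lines.length; i++) {
    if (!lines[i].startsWith(tag)) continue;
    const attrs = lines[i].slice(tag.length);
    // (?:^|,) keeps AVERAGE-BANDWIDTH= from matching as BANDWIDTH=
    const bw = /(?:^|,)BANDWIDTH=(\d+)/.exec(attrs);
    const res = /(?:^|,)RESOLUTION=(\d+x\d+)/.exec(attrs);
    // The URI is the next line that is neither blank nor a tag/comment.
    let j = i + 1;
    while (j < lines.length && (lines[j] === "" || lines[j].startsWith("#"))) j++;
    if (bw && j < lines.length) {
      variants.push({ bandwidth: Number(bw[1]), resolution: res ? res[1] : undefined, uri: lines[j] });
    }
  }
  return variants;
}

// Highest rendition whose declared bandwidth fits the budget,
// falling back to the lowest one when nothing fits.
function selectVariant(variants: Variant[], measuredBps: number, safety = 0.8): Variant | undefined {
  const sorted = [...variants].sort((a, b) => a.bandwidth - b.bandwidth);
  const budget = measuredBps * safety;
  let choice: Variant | undefined = sorted[0];
  for (const v of sorted) {
    if (v.bandwidth <= budget) choice = v;
  }
  return choice;
}

const master = [
  "#EXTM3U",
  "#EXT-X-STREAM-INF:BANDWIDTH=800000,RESOLUTION=640x360",
  "low/index.m3u8",
  "#EXT-X-STREAM-INF:BANDWIDTH=2500000,RESOLUTION=1280x720",
  "mid/index.m3u8",
  "#EXT-X-STREAM-INF:BANDWIDTH=5000000,RESOLUTION=1920x1080",
  "high/index.m3u8",
].join("\n");

const variants = parseMasterPlaylist(master);
console.log(selectVariant(variants, 4000000)?.uri); // budget 3.2 Mbps -> "mid/index.m3u8"
```

Re-running the selection every time a chunk finishes downloading is what lets the resolution follow the network, at chunk granularity.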

Thanks to the ubiquity of services like YouTube and Netflix, everybody today has experienced the advantages of changing resolution while watching a movie, and the better user experience it provides compared with the linear, all-or-nothing experience that prevailed before.

WebRTC, a more recent technology than HLS, had of course identified adaptive bitrate as a must-have, but chose to implement it in a way that would not induce delays like HLS does.

Delay and latency with respect to chunk sizes

Many have realized over the past few years that the only way to reduce latency when distributing media content is to reduce the size of the chunks. The original HLS chunk size was around 10 seconds of media, and a player would traditionally buffer three chunks, bringing the minimum latency, by design, to 30 seconds. Today, more aggressive settings, using chunks of 1 second of media for a total latency of 2–6 seconds, are pretty common. Going for lower latency with HLS requires some extra magic and starts to push the limits of the protocol, at the cost of interoperability.
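
The by-design latency arithmetic above reduces to chunk duration times the number of buffered chunks. A minimal sketch, assuming the player always waits for whole chunks and ignoring encoding, packaging and CDN delays (the helper name is hypothetical):

```typescript
// Lower bound on HLS latency by design: the player buffers whole chunks
// before starting playback. Encoding, packaging, CDN and network delays
// come on top of this and are ignored here.
function minHlsLatencySeconds(chunkSeconds: number, bufferedChunks = 3): number {
  return chunkSeconds * bufferedChunks;
}

console.log(minHlsLatencySeconds(10)); // 30: original ~10 s chunks, 3 buffered
console.log(minHlsLatencySeconds(1)); // 3: aggressive 1 s chunks
console.log(minHlsLatencySeconds(1, 2)); // 2: 1 s chunks, 2 buffered
```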

Still, WebRTC uses chunks with less than 2 ms (1) of media!

WebRTC took the optimal approach by default, answering the following two questions:

- What is the smallest chunk of media I can send over the network without having too much bandwidth overhead?

- What is the biggest chunk of media I can send over a network without network devices fragmenting it and inducing delay?

For a long time, network engineers have used the notion of a Maximum Transmission Unit, or MTU, which is the biggest packet (carrying TCP or UDP) that can be sent over a link without network appliances needing to fragment it. In practice, on most of the internet this limit is 1,500 Bytes (the Ethernet MTU): whatever is sent over the network ends up split into packets of at most 1,500 Bytes. WebRTC took a conservative approach by capping its packets at 1,400 Bytes, which leaves headroom for extra headers added along the path and gives a near-perfect throughput/latency compromise.
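
To make the packet-size cap concrete, here is a naive sketch of how an encoded frame is cut into network-sized payloads. It assumes a 1,200-Byte payload budget (the useful-payload figure given in this post's note on MTU) and ignores the codec-specific payload headers that real RTP packetizers, such as H.264 fragmentation units from RFC 6184, add; `packetize` is a hypothetical helper.

```typescript
// Assumed useful payload per packet, in bytes (see the note on MTU).
const MAX_PAYLOAD = 1200;

// Split one encoded frame into consecutive payloads of at most maxPayload bytes.
function packetize(frame: Uint8Array, maxPayload: number = MAX_PAYLOAD): Uint8Array[] {
  const packets: Uint8Array[] = [];
  for (let offset = 0; offset < frame.length; offset += maxPayload) {
    // subarray() returns a view on the same buffer, so no bytes are copied.
    packets.push(frame.subarray(offset, Math.min(offset + maxPayload, frame.length)));
  }
  return packets;
}

// A 25,000-byte frame (hypothetical size) becomes 20 full payloads plus one of 1,000 bytes.
const packets = packetize(new Uint8Array(25000));
console.log(packets.length, packets[packets.length - 1].length); // 21 1000
```

Each payload can leave as soon as it is produced, so no network device has to hold and reassemble a large chunk.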

(1) One MTU carries around 1,200 Bytes of useful media payload. HD 1080p@30fps encoded with H.264 High profile typically uses around 5,000 kbps, i.e. 625,000 Bytes per second. At that rate, 1,200 Bytes represent about 1.92 ms.
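
The same computation as a small function, assuming decimal kilobits (1 kbps = 1,000 bit/s) so that one kbps is exactly one bit per millisecond; with binary kilobits the result shifts slightly but stays under 2 ms either way. The function name is illustrative.

```typescript
// Media time carried by one packet payload at a given bitrate.
// 1 kbps = 1,000 bit/s = 1 bit per millisecond, so bits / kbps gives milliseconds.
function payloadDurationMs(payloadBytes: number, bitrateKbps: number): number {
  return (payloadBytes * 8) / bitrateKbps;
}

const ms = payloadDurationMs(1200, 5000);
console.log(ms.toFixed(2)); // "1.92": 9,600 bits at 5,000 bits per ms
```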

Effect of RTT and Encoding on User Experience

The user experience also improves greatly when re-buffering, such as the re-buffering needed when changing resolution, becomes less frequent and shorter, especially for fast-paced (or live) content.

For the initial connection establishment, people often use the time between pushing a button and the media being rendered on screen, called time-to-first-media or TTFM, as a protocol-independent metric. The underlying steps for WebRTC are detailed, for example, in the callstats.io help pages.

By design, an HLS player needs to fetch the playlist file first (one round trip) to know which chunks are available for download, and then start downloading the appropriate chunk (another round trip). The same steps apply to the initial establishment (TTFM) and to any later re-buffering caused by a resolution change.
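
The round trips above can be turned into a rough model. This sketch assumes already-open connections (no TCP/TLS setup), no CDN cache misses and negligible decoding time; `hlsTtfmMs` and the sample figures are illustrative, not measurements.

```typescript
// Simplified HLS time-to-first-media, in milliseconds:
// one round trip for the playlist, one for the chunk request,
// plus the time to transfer one chunk over the link.
function hlsTtfmMs(rttMs: number, chunkSeconds: number, bitrateKbps: number, linkKbps: number): number {
  const playlistFetch = rttMs;
  const chunkRequest = rttMs;
  const chunkTransferMs = ((chunkSeconds * bitrateKbps) / linkKbps) * 1000;
  return playlistFetch + chunkRequest + chunkTransferMs;
}

// 50 ms RTT, 1 s chunks at 5,000 kbps over a 20,000 kbps link:
console.log(hlsTtfmMs(50, 1, 5000, 20000)); // 350
```

Because a resolution switch goes through the same playlist and chunk fetches, the same cost is paid again on every switch.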

In WebRTC, the TTFM depends on a mechanism called Interactive Connectivity Establishment (ICE). Nowadays, the ICE process takes around 100 ms, and work is ongoing to reduce it to 10 ms to bring it on par with the telephony experience.
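
Part of what ICE does before media can flow is rank candidate addresses (local host addresses, server-reflexive addresses learned via STUN, relayed addresses via TURN) so that the most direct paths are checked first. The sketch below computes the candidate priority defined in RFC 8445 with its recommended type preferences; the local preference of 65535 assumes a single network interface, and the helper itself is illustrative.

```typescript
// ICE candidate priority (RFC 8445):
//   priority = 2^24 * typePreference + 2^8 * localPreference + (256 - componentId)
// Type preferences are the RFC's recommended values; componentId 1 is RTP.
const TYPE_PREFERENCE = { host: 126, prflx: 110, srflx: 100, relay: 0 } as const;
type CandidateType = keyof typeof TYPE_PREFERENCE;

function candidatePriority(type: CandidateType, localPreference = 65535, componentId = 1): number {
  return 16777216 * TYPE_PREFERENCE[type] + 256 * localPreference + (256 - componentId);
}

for (const t of ["host", "srflx", "relay"] as CandidateType[]) {
  console.log(t, candidatePriority(t));
}
// host 2130706431, srflx 1694498815, relay 16777215
```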

After that, the time it takes for a WebRTC stream to change resolution depends on the encoder.

First and foremost, by default, WebRTC uses adaptive encoders and a variable bitrate. WebRTC estimates the available bandwidth, and then applies automated congestion control algorithms to modulate the bitrate without changing the spatial or temporal resolution. A quality factor named Q (for “quantization”) is used for that. It controls how coarsely the encoder represents the detail in a frame, loosely speaking the number of distinct shades it keeps. By going from, say, 50 shades of grey to 40 shades of grey, you can noticeably reduce the bandwidth usage with little impact on subjective quality. This adaptation is done at the speed of the network (a couple of milliseconds).
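
The congestion-control side can be illustrated with the loss-based rule from the IETF draft describing Google Congestion Control (draft-ietf-rmcat-gcc), the controller Google developed for WebRTC. This is a simplified sketch: the delay-based controller that runs alongside it is omitted, and how the encoder turns the resulting target bitrate into a Q value is left to its own rate control.

```typescript
// Loss-based sender rate update, after the rule in draft-ietf-rmcat-gcc:
//   loss > 10%  -> multiply the rate by (1 - 0.5 * loss)
//   loss < 2%   -> probe upward by 5%
//   otherwise   -> hold the rate
function nextTargetKbps(currentKbps: number, lossFraction: number): number {
  if (lossFraction > 0.1) return currentKbps * (1 - 0.5 * lossFraction);
  if (lossFraction < 0.02) return currentKbps * 1.05;
  return currentKbps;
}

// Resolution and frame rate stay fixed; the encoder meets each new target
// by picking a coarser or finer quantizer (Q).
let targetKbps = 2500;
for (const loss of [0, 0, 0.2, 0.05, 0]) {
  targetKbps = nextTargetKbps(targetKbps, loss);
  console.log(`loss=${loss} target=${targetKbps.toFixed(0)} kbps`);
}
```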

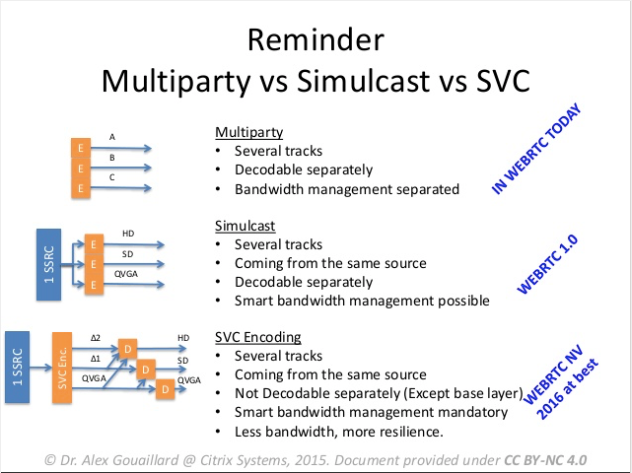

WebRTC 1.0 supports “simulcast” encoding, where separate encoders encode each spatial resolution independently. In that case, an SFU media server needs to wait for the encoder to send a key frame (a full, independently decodable frame) before it can switch a viewer to another layer. Depending on how often key frames are sent, this can take up to several seconds.
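
The key-frame constraint can be sketched as a per-viewer forwarding rule. The packet fields (`rid`, `keyFrameStart`) and the class are hypothetical simplifications; a real SFU would parse them from RTP headers and payloads, request key frames with RTCP PLI/FIR, and rewrite sequence numbers and timestamps so the viewer sees one continuous stream.

```typescript
// One simulcast packet, reduced to what the switching decision needs (hypothetical fields).
interface SimulcastPacket {
  rid: string; // which encoding the packet belongs to, e.g. "q", "h", "f"
  keyFrameStart: boolean; // true on the first packet of a key frame
}

// Per-viewer state: the encoding we want to send and the one actually being sent.
class SimulcastForwarder {
  private target: string;
  private current: string | null = null;

  constructor(initialTarget: string) {
    this.target = initialTarget;
  }

  // Called by the bandwidth allocator; a real SFU would also send a
  // PLI/FIR to ask the sender for a key frame on the new encoding.
  setTarget(rid: string): void {
    this.target = rid;
  }

  // Returns true if this packet should be forwarded to the viewer.
  // The switch only happens on a key frame of the target encoding, since
  // delta frames reference pictures the viewer's decoder never received.
  onPacket(p: SimulcastPacket): boolean {
    if (p.rid === this.target && p.keyFrameStart) {
      this.current = this.target;
    }
    return p.rid === this.current;
  }
}

const fwd = new SimulcastForwarder("h");
console.log(fwd.onPacket({ rid: "h", keyFrameStart: true })); // true: start on "h"
fwd.setTarget("q");
console.log(fwd.onPacket({ rid: "q", keyFrameStart: false })); // false: waiting for a key frame
console.log(fwd.onPacket({ rid: "h", keyFrameStart: false })); // true: keep "h" meanwhile
console.log(fwd.onPacket({ rid: "q", keyFrameStart: true })); // true: switched to "q"
```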

WebRTC NV (Next Version) has provisions for using layered codecs (a.k.a. “SVC”) like H.264 SVC, VP9 SVC, AV1, etc. In that case, the SFU media server can switch layers based on a few UDP/TCP packets, in 1 to 5 ms. A recent blog post from Jitsi illustrates the difference it makes compared with, e.g., Zoom.
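
With SVC, the equivalent SFU decision is a per-packet filter, which is why switching can happen within a few packets. A sketch, assuming each packet exposes its spatial and temporal layer ids (VP9 carries them in its RTP payload descriptor, AV1 in the dependency descriptor header extension); the interface and function names are hypothetical. Dropping layers takes effect immediately; adding a layer back generally still needs a suitable switching point in the layer structure, which this sketch ignores.

```typescript
// Layer ids carried by each SVC packet (hypothetical field names).
interface SvcPacket {
  spatialId: number; // 0 = lowest resolution
  temporalId: number; // 0 = lowest frame rate
}

// Forward only the layers at or below this viewer's target.
function shouldForward(p: SvcPacket, maxSpatial: number, maxTemporal: number): boolean {
  return p.spatialId <= maxSpatial && p.temporalId <= maxTemporal;
}

// One frame's worth of packets across three spatial layers (hypothetical).
const svcPackets: SvcPacket[] = [
  { spatialId: 0, temporalId: 0 },
  { spatialId: 1, temporalId: 0 },
  { spatialId: 2, temporalId: 0 },
];
// Viewer on a constrained link: keep spatial layers 0 and 1 only.
const kept = svcPackets.filter((p) => shouldForward(p, 1, 2));
console.log(kept.length); // 2
```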

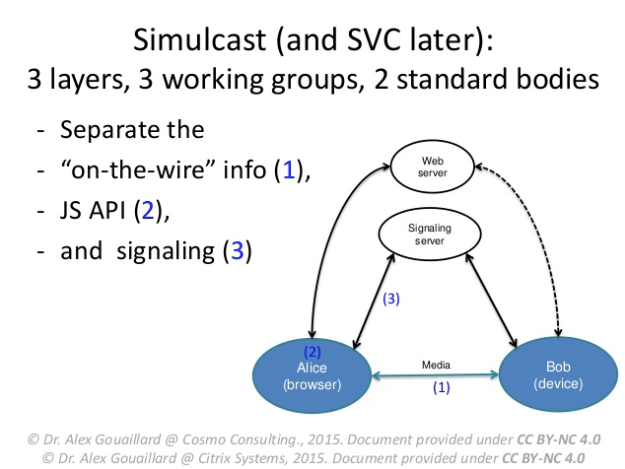

(2) SVC was not ready to be made part of WebRTC 1.0, as no SVC codec had an RTP payload format specified at the time, but everybody knew it would come and that it was the right way to approach this problem. It also brings other advantages in terms of resilience to poor network quality. At the W3C Technical Plenary in Sapporo in 2015, it was decided that simulcast would be part of 1.0, that the JS API would be SVC-ready, but that SVC would not be mandatory for WebRTC 1.0. It was also decided that only the sender side of simulcast would be standardized, for the case where the other peer is an SFU, and specifically that the SFU logic and layer switching would NOT be standardized, but left to the server or application to implement. A separate W3C specification for SVC JS APIs is available: here.

Browser Support for WebRTC 1.0 with Simulcast

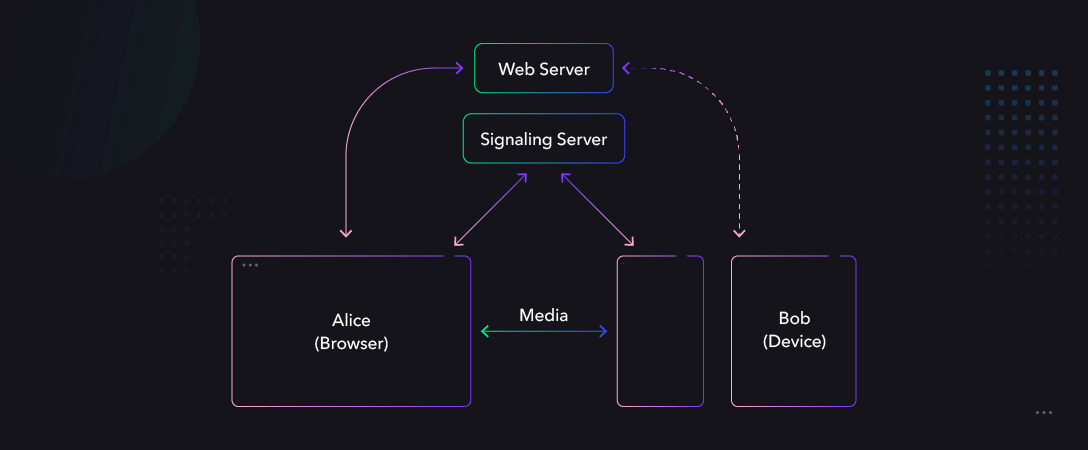

To implement simulcast, browser vendors needed:

A: smart encoders able to run several instances in parallel on the same real-time source, each at a different resolution (not represented above).

B: a way to signal to the other peer (be it a media server) during the handshake that simulcast is being sent (#2 and #3 in the drawing).

C: a way to signal in the media itself which track is which, so that smart bandwidth allocation and layer switching can be implemented server-side (#1 in the drawing).

D: finally, improved bandwidth estimation and adaptation that take into account the fact that the tracks are no longer always independent, and that when allocating bandwidth, dropping the high resolution of a source while lower-resolution tracks remain available is now an option.
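
For points B and C, the signaling ends up as a handful of SDP attributes: `a=rid` (RFC 8851), `a=simulcast` (RFC 8853), and the RTP header extension that carries the RID in each packet. The sketch below only assembles those lines to show their shape; the RID names and the extmap id 4 are arbitrary assumptions, and in practice the browser generates these lines itself.

```typescript
// Build the simulcast-related SDP lines for a sending media section.
function simulcastSdpLines(rids: string[], extmapId = 4): string[] {
  return [
    // (C) header extension that carries the RID inside every RTP packet
    `a=extmap:${extmapId} urn:ietf:params:rtp-hdrext:sdes:rtp-stream-id`,
    // (B) one a=rid line per encoding the sender will produce
    ...rids.map((rid) => `a=rid:${rid} send`),
    // (B) declare those encodings as simulcast streams, separated by ';'
    `a=simulcast:send ${rids.join(";")}`,
  ];
}

const sdpLines = simulcastSdpLines(["q", "h", "f"]);
console.log(sdpLines.join("\r\n"));
```

In a browser, calling `addTransceiver(track, { direction: "sendonly", sendEncodings: [{ rid: "q", scaleResolutionDownBy: 4 }, { rid: "h", scaleResolutionDownBy: 2 }, { rid: "f" }] })` on an `RTCPeerConnection` should lead it to emit equivalent lines in its offer.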

The details are beyond the scope of this post; for the curious, our CTO Dr Alex has written a more in-depth technical post on his blog. Suffice it to say that 12 months ago, simulcast was impossible to implement across all browsers. You had to choose between quality (simulcast with Chrome and VP8), Safari support, or accepting poor latency (no end-to-end WebRTC). Most browser vendors and SFU developers preferred not to compromise on quality and latency, and simply did not support Safari. iOS and macOS support was then mainly provided through SDKs.

In April 2018, the Millicast team contributed an H.264 simulcast implementation to the open-source WebRTC code, and helped Chrome and Safari integrate it. The Millicast team also provided testing tools to all of the browser vendors through KITE, and specifically to Apple. For the first time, browser vendors had to test against an SFU, and no standard or reference SFU existed then. As pledged during the W3C Technical Plenary meeting in October 2018 in Lyon, the Millicast team provided everyone with a WebRTC 1.0 reference implementation of their Medooze media server to test against. This week, Safari added support for VP8 simulcast as well (see their blog post, with a special shout-out to Millicast and its parent company CoSMo), so all the major browsers now implement H.264 and VP8 simulcast.

While Millicast has had a simulcast mode since launch (August 2018), thanks to our deep work with browser vendors and standards bodies, most of the WebRTC streaming services that exist today cannot do simulcast. Wowza's WebRTC implementation is barely out of beta: it supports ingesting WebRTC but not delivering it, and at its core it is still pretty much RTMP. Red5 has been using the video conferencing SFU Jitsi Core to support WebRTC, and Jitsi, for historical reasons, lags behind in simulcast support and in browser support in general. The results of the IETF 104 hackathon in Prague last weekend illustrate this very well. Red5 is not hiding this fact, listing simulcast for delivery only in December 2019 on its own roadmap. Those whose WebRTC service or technology is based on Red5, like Limelight Networks or Ant5, are behind as well.

Scaling WebRTC beyond 1,000 viewers is relatively easy: simple clustering with a couple of relaying nodes can quickly bring you to 1M viewers in the lab. The same techniques that were used for RTMP can be replicated directly, as long as you have a single encoding, in which case you get scalability and low latency, or as long as you only use WebRTC as an on-ramp to your legacy platform, in which case, hum, well, you support sending media from a web page, but you haven’t added anything really special to your platform. Either way, you haven’t improved quality.
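
The fan-out arithmetic behind that claim, as a sketch: it assumes every node can serve a fixed number of downstream connections (1,000 here, an illustrative figure rather than a benchmark) and a single encoding, so it ignores simulcast, where each tier carries several encodings and per-viewer routing decisions. The helper name is hypothetical.

```typescript
// Number of relay tiers needed below the first node so that the
// leaves of the tree can serve `viewers` connections.
function relayTiersNeeded(viewers: number, fanout: number): number {
  if (fanout < 2) throw new Error("fanout must be at least 2");
  let tiers = 0;
  let reach = fanout; // the first node alone serves `fanout` viewers
  while (reach < viewers) {
    reach *= fanout; // one more tier of relays below the current leaves
    tiers++;
  }
  return tiers;
}

console.log(relayTiersNeeded(1000, 1000)); // 0: one node is enough
console.log(relayTiersNeeded(1000000, 1000)); // 1: 1 node -> 1,000 relays -> 1,000,000 viewers
```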

However, with simulcast, stream management becomes much more complicated and cannot be done on a leg-by-leg or media-server-by-media-server basis. One needs an orchestration layer that manages the optimal routing of the right track to the right viewers, in real time. Now comes Millicast 2.0, a new version specially tailored to handle millions of concurrent viewers with adaptive bitrate, at minimal latency. End-to-end WebRTC, with no transcoding and no writing to or reading from disk, ensures the lowest possible, real-time latency. Simulcast makes sure that each viewer gets the best resolution their network characteristics and hardware capacity can handle. It works across Chrome, Edgium, Safari and Firefox, on desktop and mobile, and Millicast also provides native SDKs for those who require more control. Finally, we continue to support OBS Studio and other usual software.

The Millicast team will be at NAB 2019; contact us to set up a meeting and test Millicast 2.0 for your real-time streaming initiatives.