For broadcasters navigating the intricate landscape of live streaming, understanding and managing latency is pivotal. In a realm where every second counts and viewers’ expectations for seamless experiences are higher than ever, latency can be the make-or-break factor in your broadcast’s success.

In this comprehensive guide, we’re unpacking everything you need to know about latency in live streaming, from what latency is and why it matters to the fastest low-latency streaming protocols available.

- What is low latency?

- Why does latency matter?

- Improving latency

- Understanding use cases for different latency

- Protocols used for low latency

- The future of low latency in live streaming

- Conclusion

For content creators and broadcasters, understanding and optimizing latency is essential to ensuring your audience is immersed and as close to “in the moment” as technically possible.

What is low latency?

At its core, latency is time. It’s the time taken for data to travel from point A to point B. In the context of video streaming, it’s the delay between actions happening in the physical world — like a football being kicked — and those actions being seen on your screen.

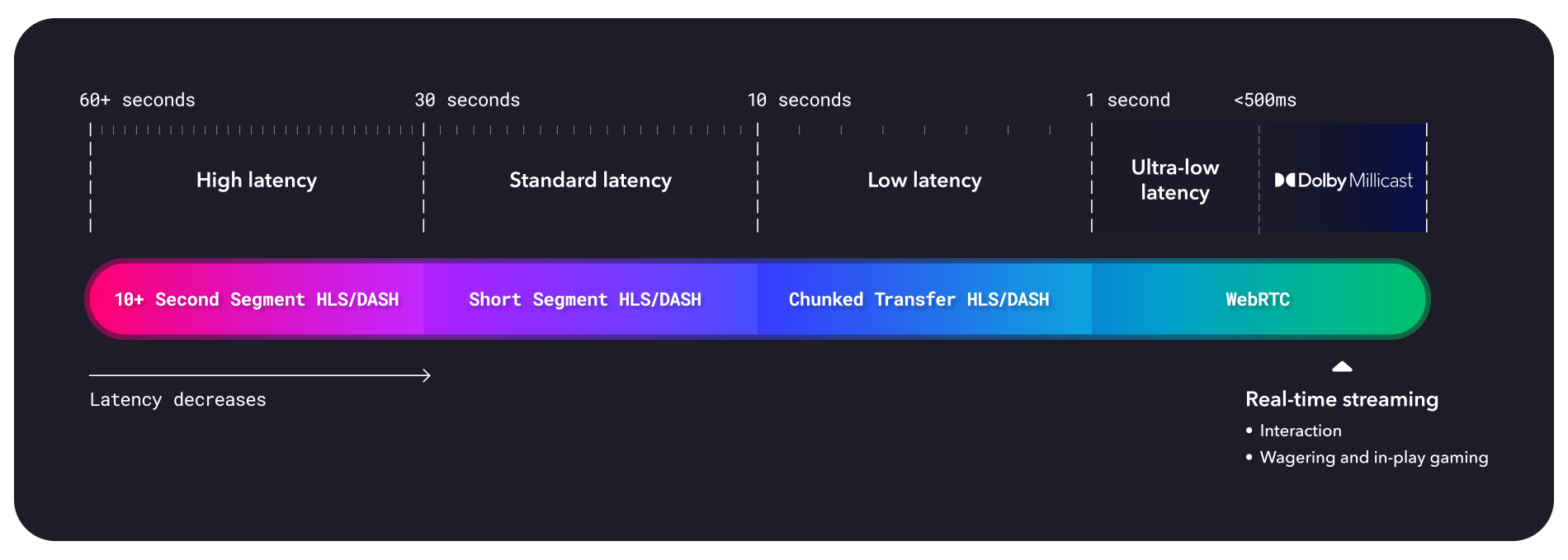

It’s an inherent part of any live streaming setup, but how much latency you get varies widely with technology and configuration. Low-latency streaming typically falls into two categories, and the right one depends on the use case. Low latency means a delay of a few seconds, which is acceptable for most live broadcasts with no two-way interaction. Ultra-low latency means a delay of under one second, which is essential for interactive streaming. For real-time streaming, where latency needs to stay under half a second, WebRTC is commonly used.

To get an idea of scale, consider this: traditional broadcast television can have a lag of around 18 seconds. Today, we measure high-performance streams in single-digit seconds, sometimes fractions of a second, which once seemed the stuff of pure imagination.
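To make the thresholds concrete, here is a minimal Python sketch that sorts a measured delay into the categories above. The half-second and one-second cut-offs come from the text; the 10-second ceiling for "low latency" and the label "standard latency" for anything slower are assumptions, since the guide only says "several seconds".

```python
LOW_LATENCY_MAX_S = 10.0  # assumption: the guide only says "several seconds"


def latency_tier(delay_s: float) -> str:
    """Map an end-to-end delay in seconds to the latency categories described above."""
    if delay_s < 0:
        raise ValueError("latency cannot be negative")
    if delay_s < 0.5:
        return "real-time"  # the sub-half-second range where WebRTC is used
    if delay_s < 1.0:
        return "ultra-low latency"  # under one second
    if delay_s <= LOW_LATENCY_MAX_S:
        return "low latency"  # a few seconds
    return "standard latency"  # anything slower (hypothetical label)


for delay in (0.3, 0.8, 4.0, 18.0):
    print(f"{delay:>5} s -> {latency_tier(delay)}")
```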

Types of streaming latency

Further technical definitions of latency include:

- Glass-to-glass latency represents the entire delay from the capture phase through encoding, transmission, decoding, and rendering of the video on screen.

- Encoding latency is the time it takes to process the raw video and audio data into a format that can be streamed over the internet.

- Network latency is the time data spends in transit between the streaming server and the viewer’s device. It grows with distance: the farther the data travels, the longer it takes to arrive and, typically, the more network hops it passes through, each adding delay.

- Playback latency comes from buffering in the player and the decoding and rendering capabilities of the viewer’s device.

For broadcasters, understanding the different types of latency is critical in diagnosing the lag and strategizing how to effectively minimize it.
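One practical way to diagnose lag is to write down a latency budget: glass-to-glass latency is roughly the sum of the stages the video passes through, so the largest term is usually the first one to attack. The Python sketch below assumes per-stage figures you have measured yourself; the numbers in the example are hypothetical, chosen to illustrate a buffer-dominated stream.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class LatencyBudget:
    """Per-stage delays in milliseconds (all inputs are your own measurements)."""
    capture_ms: float
    encoding_ms: float
    network_ms: float
    decoding_ms: float
    playback_buffer_ms: float
    rendering_ms: float

    def glass_to_glass_ms(self) -> float:
        """Total delay from camera to screen: the sum of every stage."""
        return (self.capture_ms + self.encoding_ms + self.network_ms
                + self.decoding_ms + self.playback_buffer_ms + self.rendering_ms)

    def largest_stage(self) -> Tuple[str, float]:
        """Name and size of the stage contributing the most delay."""
        stages = vars(self)  # dataclass fields as a {name: value} dict
        name = max(stages, key=stages.get)
        return name, stages[name]


# Hypothetical figures for illustration only.
budget = LatencyBudget(capture_ms=33, encoding_ms=50, network_ms=80,
                       decoding_ms=20, playback_buffer_ms=2000, rendering_ms=16)
print(budget.glass_to_glass_ms())
print(budget.largest_stage())
```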

Why does latency matter?

Latency directly influences the viewer’s experience. With high latency, viewers may see spoilers on social media or other channels before the stream catches up, diminishing the live aspect of the broadcast. For interactive streams, such as live Q&As, gaming, or auctions, high latency can disrupt the flow and make real-time engagement difficult.

For live sports, the stakes are sky-high. Immersive sports experiences need to keep pace with the cheers and tackles in the stadium, which requires lightning-quick streams. iGaming and esports demand even more: ultra-low latency can be the difference between a winning move and a missed betting opportunity. As viewers increasingly expect live interaction, any delay detracts from the experience.

Low latency matters across a wide range of live streaming use cases. From live auctions where every millisecond counts to real-time video discussions that rely on immediate feedback, low latency has moved from a ‘nice to have’ to an essential feature.

Improving latency

Reducing latency is paramount for real-time and interactive content. Thankfully, a range of technologies can help trim the lag and bring your live video streaming closer to a real-time experience.

Broadcasters can employ the following strategies:

1. Use a Content Delivery Network (CDN)

Choosing a CDN with wide geographic coverage puts servers closer to viewers, shortening the distance data must travel and reducing transmission delays.

2. Optimize your encoder settings

Selecting the right codec and encoder settings, and keeping the bitrate as low as acceptable video quality allows, can lead to faster processing and delivery.

3. Leverage protocols designed for low latency

Protocols such as WebRTC (Web Real-Time Communication) can establish direct, peer-to-peer connections, minimizing the delays inherent in traditional streaming methods.

4. Conduct regular network assessments

Regularly identifying and fixing network bottlenecks helps maintain optimal streaming performance.
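The benefit of a CDN (point 1 above) can be estimated with simple arithmetic. Light in optical fiber travels at roughly two-thirds of its speed in a vacuum, about 200 km per millisecond, so distance alone sets a floor on round-trip time. The Python sketch below computes only that floor; the distances are hypothetical, and real routes add hops, queuing, and processing delay on top.

```python
FIBER_KM_PER_MS = 200.0  # light in optical fiber covers roughly 200 km per millisecond


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time imposed by propagation delay alone.

    Real routes are longer than the straight-line distance, and every hop adds
    processing and queuing delay, so measured RTTs will be higher.
    """
    if distance_km < 0:
        raise ValueError("distance cannot be negative")
    return 2 * distance_km / FIBER_KM_PER_MS


# Hypothetical distances: a distant origin server versus a nearby CDN edge server.
for label, km in (("origin", 6000), ("CDN edge", 100)):
    print(f"{label}: at least {min_round_trip_ms(km):.1f} ms per round trip")
```

Because a streaming session involves many round trips (connection setup and repeated requests for media), a shorter distance saves that floor again on every one of them.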

It’s also critical to test your stream on as many devices and operating systems as possible before the event to identify any potential playback issues. By implementing these techniques, broadcasters can achieve lower latency, providing viewers with a more immediate and engaging live streaming experience.
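To illustrate point 2, the sketch below builds an ffmpeg command line that trades some compression efficiency for lower encoding delay, assuming ffmpeg built with libx264. The flags are standard ffmpeg/x264 options, but the specific values (30 fps, a one-second keyframe interval, 2,500 kbps) and the RTMP ingest URL are placeholder assumptions to tune for your own stream.

```python
from typing import List


def low_latency_x264_args(input_url: str, output_url: str, fps: int = 30,
                          keyframe_interval_s: float = 1.0,
                          bitrate_kbps: int = 2500) -> List[str]:
    """Return an ffmpeg argument list for low-delay H.264 encoding to an RTMP ingest."""
    gop_frames = round(fps * keyframe_interval_s)  # one keyframe every N frames
    return [
        "ffmpeg", "-i", input_url,
        "-c:v", "libx264",
        "-preset", "veryfast",           # faster preset: less encoding time per frame
        "-tune", "zerolatency",          # disables B-frames and lookahead that hold frames back
        "-g", str(gop_frames),
        "-b:v", f"{bitrate_kbps}k",
        "-maxrate", f"{bitrate_kbps}k",
        "-bufsize", f"{bitrate_kbps}k",  # rate-control buffer of about one second
        "-c:a", "aac", "-b:a", "128k",
        "-f", "flv", output_url,         # RTMP output is carried in the FLV container
    ]


# Hypothetical input file and ingest URL.
args = low_latency_x264_args("input.mp4", "rtmp://ingest.example.com/live/STREAM_KEY")
print(" ".join(args))
```

The function only returns the argument list; passing it to `subprocess.run(args, check=True)` would start encoding. The `zerolatency` tune is the main reason this configuration lowers delay, since it stops the encoder from buffering frames before emitting output.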

Understanding use cases for different latency

Depending on the content and context, different use cases will require different latencies to achieve the best balance between real-time interaction and stream quality. Here’s a look at six distinct streaming scenarios and how latency comes into play for each.

Live sports broadcasting

For live sports events, ultra-low latency or real-time streaming is paramount. Fans expect to see plays unfold in real time, without spoilers from social media or text messages ruining the suspense. A delay of even a few seconds can significantly impact the viewer’s experience, so ultra-low latency streaming solutions are often used to deliver the action as it happens, keeping viewers engaged and immersed in the game.

Online casinos and live sports betting

In the realm of live casinos and live sports microbetting, latency is a critical factor for both players and spectators. For players, high latency can hinder gameplay, affecting reaction times and competitiveness. For spectators, ultra-low latency is essential to keep the stream as close to live as possible, allowing viewers to react and interact with the content in near real time. This is especially important for interactive elements such as live chats or polls, where immediate feedback enhances the communal viewing experience.

Corporate webinars and conferences

Corporate webinars and virtual conferences have different latency requirements. Here, a slight delay is often acceptable, as the focus is on clear, uninterrupted delivery of content rather than instant interaction. Standard latency streams are typically used, balancing quality and real-time engagement without the need for the immediacy found in sports or gaming. This allows for higher-quality video and audio, ensuring that presentations are professional and impactful.

Live auctions

Live auctions are a unique use case where ultra-low latency is crucial. Bidders need to see and react to the auction in real time to place their bids effectively. Any significant delay can result in lost opportunities or confusion among participants. Therefore, platforms hosting live auctions prioritize minimizing latency to facilitate a fair and dynamic bidding environment, mirroring the experience of being physically present at an auction.

Live surveillance

In the realm of live surveillance, real-time streaming with minimal latency is not just a preference; it’s an essential requirement. The effectiveness of a surveillance system hinges on its ability to deliver live footage with as little delay as possible. This immediacy allows security personnel or homeowners to react swiftly to any potential threats or incidents. In scenarios where every second counts, such as unauthorized access or emergencies, high latency can compromise the safety and security of people and property.

Live patient monitoring & telehealth

Telehealth, particularly in the context of remote patient monitoring and live consultations, similarly demands real-time streaming capabilities to ensure the highest quality of care. For healthcare professionals, being able to monitor and interact with patients in real time is crucial for accurately assessing symptoms, providing diagnoses, and making treatment decisions. Delays or interruptions in the video feed can hinder the communication between patients and providers, potentially affecting the outcome of the consultation.

Protocols used for low latency

When comparing traditional streaming protocols like HTTP Live Streaming (HLS) and Dynamic Adaptive Streaming over HTTP (DASH) with real-time alternatives, it’s clear that while HLS and DASH are popular choices for both high and low-latency streaming, they fall short in delivering ultra-low latency experiences.

WebRTC and Secure Reliable Transport (SRT), on the other hand, are designed for real-time, low-latency delivery, making them ideal choices for ultra-low latency scenarios.
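Much of the reason segment-based HLS falls short is arithmetic. RFC 8216 advises a client not to start playback in a segment that begins less than three target durations from the end of a live playlist, so the player's starting offset scales with segment length. A rough Python sketch, treating the segment duration as the target duration and leaving encoding, packaging, and CDN delay as a hypothetical extra term:

```python
# RFC 8216 (HLS): clients should not start playback in a segment that begins
# less than three target durations from the end of the live playlist.
HOLD_BACK_SEGMENTS = 3


def hls_player_offset_s(segment_duration_s: float,
                        segments_behind: int = HOLD_BACK_SEGMENTS) -> float:
    """Approximate how far behind the live edge a conventional HLS player starts."""
    return segment_duration_s * segments_behind


def estimated_hls_latency_s(segment_duration_s: float, other_delays_s: float = 0.0) -> float:
    """Player offset plus any other delays (encoding, packaging, CDN); inputs are hypothetical."""
    return hls_player_offset_s(segment_duration_s) + other_delays_s


for seg in (6.0, 2.0):
    print(f"{seg:.0f} s segments -> about {estimated_hls_latency_s(seg):.0f} s behind live, "
          "before other delays")
```

Shorter segments shrink the offset, but each segment still has to be fully produced before it can be listed, which keeps classic HLS and DASH in the multi-second range.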

RTMP

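RTMP’s legacy as a low-latency protocol is undeniable, but browser playback of RTMP relied on Flash, which has been retired, and the protocol has no native HTML5 support. As a result, its role in the streaming ecosystem has shifted: today it is mostly used for ingest, carrying the stream from the encoder to the streaming server.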

SRT

SRT has carved a niche for itself in the low-latency landscape, with its strength being high-quality delivery over unstable networks. However, few players support it out of the box, so SRT streams are typically transcoded after ingest into formats that viewers’ players can handle.
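SRT's resilience on unstable networks comes from a receive buffer, set by its latency option, that gives lost packets time to be retransmitted before playout. A rule of thumb often quoted in SRT deployment guidance is to size that buffer at several times the round-trip time, and more on lossier links. The Python sketch below assumes a multiplier of 4 and never goes below libsrt's 120 ms default; the multiplier and the helper function are illustrative assumptions, not part of SRT's API.

```python
import math

SRT_DEFAULT_LATENCY_MS = 120  # libsrt's default receiver latency


def suggested_srt_latency_ms(rtt_ms: float, rtt_multiplier: float = 4.0) -> int:
    """Rule-of-thumb SRT latency: a multiple of the measured RTT, never below the default.

    Each retransmission needs at least one round trip (loss report, then resend),
    so lossier links call for a larger multiplier. The default of 4 is an assumption.
    """
    if rtt_ms < 0:
        raise ValueError("RTT cannot be negative")
    return max(SRT_DEFAULT_LATENCY_MS, math.ceil(rtt_ms * rtt_multiplier))


for rtt in (20, 80, 150):  # hypothetical measured round-trip times in ms
    print(f"RTT {rtt} ms -> SRT latency {suggested_srt_latency_ms(rtt)} ms")
```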

WebRTC

WebRTC brings the power of real-time communication to the browser without the need for additional plugins like Flash. Its low latency, combined with its HTML5 native approach, makes it a formidable contender in today’s streaming environment.

Low-Latency HLS and CMAF for DASH

These technologies extend the incumbent HLS and MPEG-DASH protocols with much faster delivery, chiefly by splitting each segment into smaller parts (LL-HLS) or CMAF chunks (low-latency DASH) that can be sent before the full segment is finished. Getting the most out of them, however, may require significant back-end support from the encoder, packager, CDN, and player.
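Low-Latency HLS shows how the smaller units pay off. A low-latency playlist's EXT-X-SERVER-CONTROL tag carries a PART-HOLD-BACK attribute, the server's recommended minimum distance from the live edge for low-latency playback, which the HLS specification says must be at least twice the part target duration and should be at least three times. A hypothetical Python helper for checking a configured value:

```python
def check_part_hold_back(part_hold_back_s: float, part_target_s: float) -> str:
    """Check an LL-HLS PART-HOLD-BACK value against the spec's lower bounds."""
    if part_target_s <= 0:
        raise ValueError("part target duration must be positive")
    if part_hold_back_s < 2 * part_target_s:
        return "invalid: below 2x PART-TARGET"  # the MUST-level minimum
    if part_hold_back_s < 3 * part_target_s:
        return "allowed, but below the recommended 3x PART-TARGET"  # SHOULD-level
    return "ok"


print(check_part_hold_back(3.0, 1.0))  # hypothetical 1 s parts, 3 s hold-back
print(check_part_hold_back(2.5, 1.0))
print(check_part_hold_back(1.5, 1.0))
```

With one-second parts, a 3-second PART-HOLD-BACK meets the recommendation, versus roughly 18 seconds for a classic player starting three 6-second segments behind live.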

The future of low latency in live streaming

The future of low latency streaming is poised for significant advancements, driven by emerging technologies and growing demand for real-time interactions. As the world becomes increasingly connected, the expectation for instant access to live content is becoming the norm, pushing the boundaries of current streaming capabilities. Innovations in 5G technology, edge computing, and more efficient video compression algorithms are at the forefront of this evolution.

These technologies promise to drastically reduce latency, making near-instantaneous streaming a reality. This will not only enhance experiences in entertainment and iGaming but also unlock new possibilities in remote education, telemedicine, and live event coverage, where immediacy is crucial.

Furthermore, the integration of artificial intelligence (AI) and machine learning (ML) into streaming networks is expected to play a pivotal role in optimizing the delivery of live video content. By predicting bandwidth fluctuations and viewer preferences, AI can dynamically adjust stream quality, helping keep playback smooth with less buffering, even in ultra-low latency scenarios.
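AI-driven adaptation is still emerging, but the underlying loop can be sketched without ML: estimate available bandwidth, then pick the highest rendition that fits. The Python sketch below swaps in a plain exponentially weighted moving average (EWMA) where a learned predictor would go; the smoothing factor, the 0.8 safety margin, and the bitrate ladder are all hypothetical.

```python
from typing import List, Optional


class ThroughputEstimator:
    """Exponentially weighted moving average of throughput samples (kbps)."""

    def __init__(self, alpha: float = 0.3) -> None:
        if not 0 < alpha <= 1:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha  # weight given to the newest sample
        self.estimate_kbps: Optional[float] = None

    def update(self, sample_kbps: float) -> float:
        """Fold in a new throughput measurement and return the updated estimate."""
        if self.estimate_kbps is None:
            self.estimate_kbps = sample_kbps  # the first sample seeds the average
        else:
            self.estimate_kbps = (self.alpha * sample_kbps
                                  + (1 - self.alpha) * self.estimate_kbps)
        return self.estimate_kbps


def pick_rendition(ladder_kbps: List[int], estimate_kbps: float, safety: float = 0.8) -> int:
    """Highest bitrate within safety * estimate; falls back to the lowest rendition."""
    budget = estimate_kbps * safety
    affordable = [r for r in sorted(ladder_kbps) if r <= budget]
    return affordable[-1] if affordable else min(ladder_kbps)


ladder = [800, 1600, 3000, 5000]  # hypothetical bitrate ladder in kbps
estimator = ThroughputEstimator(alpha=0.5)
for sample in (6000, 4000, 2000):  # hypothetical throughput measurements
    est = estimator.update(sample)
    print(f"estimate {est:.0f} kbps -> play {pick_rendition(ladder, est)} kbps")
```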

Conclusion

Mastering video latency is akin to mastering time itself, at least within the world of live streaming. With the knowledge and strategies outlined in this guide, content creators and broadcasters are better equipped to tackle latency head-on, delivering seamless live experiences that captivate and engage audiences worldwide.

By understanding the nuances of video latency and applying best practices to mitigate its impact, the streaming world can continue to deliver immersive, real-time experiences that draw viewers closer than ever to the heart of the action.